Abstract

Artificial Intelligence (A.I.) is affecting our lives in a multitude of ways, from how we interact with technology on a personal level to enhancing the operations of businesses around the world. This paper explores the application of A.I. in products, particularly computer vision technology, by first introducing the related concepts. It then states some current challenges faced by A.I. products and proposes suggestions on product design and product management for practitioners.

Key Words

artificial intelligence, computer vision, product design

1. Introduction

In recent years, the era of the mobile Internet has gradually given way to an era of artificial intelligence. People's lives are increasingly surrounded by artificial intelligence software and hardware, and A.I. technology is clearly and gradually transforming human lifestyles and behavior. The past decade has witnessed immense technological progress that continues to gather pace, and companies across sectors are increasingly harnessing the power of A.I. in their operations.

At present, the data types processed by A.I. technology fall into four categories: text, voice, image and video. Image and video are mainly handled by computer vision technology. As one vital element of artificial intelligence, computer vision has developed rapidly with the support of algorithms, data and computing capacity, and large-scale application has therefore become feasible. From a disciplinary perspective, computer vision is a cross-disciplinary subject encompassing computer science, mathematics, engineering, physics, biology and psychology. According to numerous scientists, artificial intelligence has roots in computer vision, which in turn has paved the way for its development. Artificial intelligence has been utilized across a broad range of sectors to improve our quality of life, culminating in what we now see as a fast-growing collection of useful applications derived from this field of study (Le, 2018). Sectors including finance, transportation, healthcare, detection, household and entertainment have all benefited from the highly advanced and specialist characteristics of A.I.

2. Some Definitions

There are several different definitions of artificial intelligence. According to Gordon, “Artificial Intelligence may be defined as a collection of several analytic tools that collectively attempt to imitate life.” Poole and Mackworth describe it as “the field that studies the synthesis and analysis of computational agents that act intelligently.” In general terms, artificial intelligence is the simulation of human intelligence processes by machines. It is a profound concept and a pioneering, cutting-edge discipline.

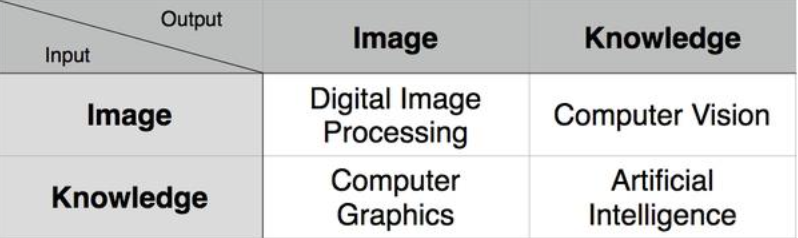

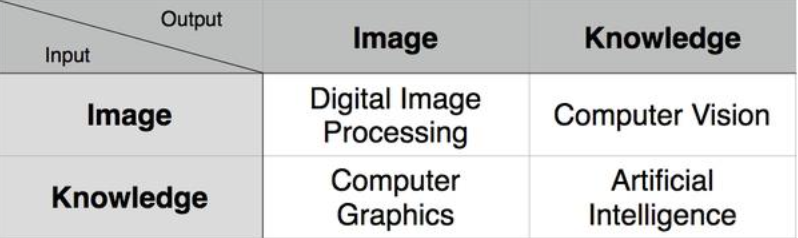

Computer Vision (C.V.). This can be described as “computing properties of the 3D world from one or more digital images” (Trucco & Verri, 2006). Stockman and Shapiro define its goal as “to make useful decisions about real physical objects and scenes based on sensed images”. Unlike Computer Graphics and Digital Image Processing, the input of Computer Vision is an image or sequence of images, usually derived from a camera or video file. The output is an understanding of the real world corresponding to those images, such as detected faces or identified license plates.

Computer Graphics (C.G.). The input of Computer Graphics is a description of a virtual scene, created with specialist hardware and software. This is usually an array of polygons; each polygon consists of three vertices, and each vertex includes three-dimensional coordinates, mapping coordinates, RGB values and so on. The output is an image, that is, a two-dimensional array of pixels.

Digital Image Processing (D.I.P.). The input of Digital Image Processing is an image, and the output is also an image. Applying a filter to an image in Photoshop is typical of image processing. Common operations include blurring, grayscale conversion and contrast enhancement.
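The image-in, image-out nature of digital image processing can be illustrated with a minimal sketch. It assumes an image stored as nested Python lists of (r, g, b) tuples; the function names and the luminance weights (the widely used ITU-R BT.601 values) are illustrative choices, not something prescribed by the sources cited here.

```python
def to_grayscale(rgb_image):
    """Convert rows of (r, g, b) tuples into rows of luminance values (0-255)."""
    # Assumption: BT.601 luma weights, one common convention among several.
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in rgb_image
    ]


def stretch_contrast(gray_image):
    """Linearly rescale so the darkest pixel becomes 0 and the brightest 255."""
    pixels = [p for row in gray_image for p in row]
    lo, hi = min(pixels), max(pixels)
    if hi == lo:
        # A flat image has no contrast to stretch; return a copy unchanged.
        return [row[:] for row in gray_image]
    return [[round((p - lo) * 255 / (hi - lo)) for p in row] for row in gray_image]


# A tiny 2x2 example image: the input is an image, and so is every output.
image = [
    [(100, 100, 100), (120, 120, 120)],
    [(140, 140, 140), (200, 200, 200)],
]
gray = to_grayscale(image)
enhanced = stretch_contrast(gray)
print(gray)
print(enhanced)
```

Both functions return a new image of the same size, which is what distinguishes this kind of processing from computer vision, where the output is an interpretation of the scene.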

3. Application forms of A.I. in products

The epoch of human interaction with intelligent machines is coming. Intelligent machines continuously learn from human beings, forging a deeper and more precise cognitive ability that can bring increased convenience to people's lives. Intelligent assistance can also be employed to carry out further knowledge processing and integration. From a product form perspective, A.I. now spans almost all forms of computer software, and at least primitive applications of A.I. have been integrated into numerous industries, adding further convenience.

A.I. encompasses a plethora of cognitive technologies, such as robotics, speech recognition, natural language processing, computer vision, machine learning and optimization. As previously discussed, the data types processed by A.I. technology mainly fall into four categories: text, voice, image and video. This section therefore discusses the A.I. cognitive technologies corresponding to these data types, which are also the main application forms of A.I. in products.

Computer vision

Computer vision primarily handles high-dimensional, dense data such as images and videos. It extracts a great depth of information, and its application scenarios are the most prevalent in the A.I. field. Computer vision is also the area in which global A.I. companies are most concentrated, with high commercial maturity: these companies account for approximately 40% of all A.I. companies worldwide (Wallstreetcn, 2019). Computer vision is utilized in a diverse range of products: medical imaging analysis (to improve disease prediction, diagnosis and treatment), face recognition in Facebook (to automatically identify people in photos), and security and surveillance (to identify suspects). Object recognition is also employed by many companies that host online platforms, as it can recognize items and so offer more online purchase options.

Natural Language Processing

Natural language processing (N.L.P.) is multi-faceted. It refers to the human-like text processing capabilities possessed by computers, such as extracting meaning from text and even interpreting meaning independently from readable, natural-style and grammatically correct text. A natural language processing system may not necessarily understand text the way humans do, but it can manage text skillfully with very complex and mature methods. For example, it can automatically identify all persons and places mentioned in a document, recognize the core issue of the document, or extract terms and conditions from a mass of human-readable contracts and tabulate them.

Like computer vision technology, natural language processing incorporates a variety of technologies to achieve its goals. For instance, it can establish language models that predict the probability distribution of language expressions, that is, the likelihood that a given string of characters or words expresses a particular meaning. Selected features can be combined with specific elements of a text in order to identify a passage, and by recognizing these elements a certain type of text can be discriminated from others, such as junk mail from normal mail. Classification methods driven by machine learning are becoming the standard for determining whether an email is spam. There is an abundance of practical applications of N.L.P. technology, including the analysis of customer feedback on a particular product or service, the automatic discovery of certain meanings in civil lawsuits or government investigations, and the automatic writing of formulaic articles on corporate revenue or daily news (Indurkhya & Damerau, 2010).
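The idea of separating junk mail from normal mail with a machine-learned classifier can be sketched with a small naive Bayes model. This is only one of many possible classification methods and is not claimed to be the approach used in any cited system; the training messages, the labels "spam" and "normal", and the function names are all made up for illustration.

```python
import math
from collections import Counter


def train(labelled_messages):
    """Count word occurrences per class from (text, label) pairs."""
    word_counts = {"spam": Counter(), "normal": Counter()}
    class_counts = Counter()
    for text, label in labelled_messages:
        class_counts[label] += 1
        word_counts[label].update(text.lower().split())
    return word_counts, class_counts


def classify(text, word_counts, class_counts):
    """Return the class with the higher log-probability under naive Bayes."""
    vocabulary = set(word_counts["spam"]) | set(word_counts["normal"])
    total_messages = sum(class_counts.values())
    scores = {}
    for label in ("spam", "normal"):
        total_words = sum(word_counts[label].values())
        # Start from the class prior, then add one term per word.
        score = math.log(class_counts[label] / total_messages)
        for word in text.lower().split():
            # Laplace (add-one) smoothing keeps unseen words from zeroing the score.
            count = word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocabulary)))
        scores[label] = score
    return max(scores, key=scores.get)


# Hypothetical toy training set; a real filter needs far more data.
training_data = [
    ("win a free prize now", "spam"),
    ("free money click now", "spam"),
    ("meeting agenda for monday", "normal"),
    ("please review the project report", "normal"),
]
model = train(training_data)
print(classify("free prize money", *model))
print(classify("project meeting on monday", *model))
```

The per-class word counts act as a very crude language model: each class assigns a probability to a string of words, and the message is attributed to the class under which it is more likely.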

Speech Recognition

Speech recognition focuses specifically on the automatic and accurate transcription of human speech. It must overcome challenges akin to those of natural language processing: dealing with different accents and background noise, and distinguishing homonyms and heteronyms. At the same time, it needs to keep pace with the normal speed of speech. Speech recognition systems adopt some of the same technologies as natural language processing systems, supplemented by other technologies such as acoustic models, which describe sounds and their probability in specific sequences or languages. Intelligent voice assistants, medical dictation, voice writing, computer system voice control and telephone customer service are some of the principal applications of speech recognition currently available.

4. The application of computer vision in products

As computers continue to grow in popularity, Computer Vision (C.V.) techniques are developing rapidly. The pivotal task of computer vision is to process captured images and video and to extract the corresponding scene information from them. In many specialized image fields, such as text recognition, QR code recognition, fingerprint recognition and face recognition, computer vision has not only equaled but in some instances surpassed human recognition levels. In terms of recognition efficiency, computers have far surpassed human capabilities.

4.1 Core technologies of computer vision

Computer vision simulates human visual tasks by building artificial models. Its essence is to replicate the process of human perception and observation (Stockman & Shapiro, 2001). This involves a series of processes that can be understood and implemented in an artificial system. The core computer vision tasks are as follows:

Object Detection

Object detection is the initial step of visual perception and thus a vital branch of computer vision. Its function is first to mark the position of each object with a bounding frame and then to identify the category of the object. Object detection differs from image classification. Detection focuses on searching for objects, and its targets must have a fixed shape and contour, whereas the target of image classification can be arbitrary: an object, an attribute or a scene.

Object recognition

Object recognition essentially determines whether a set of image data contains a specific object, image feature or motion state. A typical application of this technology is biometrics, including fingerprint recognition, iris scanning and face matching. Apps such as Snapchat and Facebook use face recognition algorithms to recognize faces. There are, however, currently limits to the versatility of the technology. So far, no single method works across all situations. Existing technology can only solve specific recognition problems, such as simple geometric pattern recognition, face recognition, printed or handwritten document recognition and vehicle recognition. Moreover, these methods must be deployed in a specific environment with particular illumination, background and target posture. Both object detection and object recognition currently face significant challenges, including image occlusion, intra-class variation, scale variation, viewpoint variation, lighting conditions and background clutter (Mansouri, 2019).

Image Classification

The main research content of object classification is the feature description of the image, used to decide whether the image contains a given object. In general, object classification algorithms describe the whole image globally, using hand-crafted features or feature learning methods, and then use a classifier to determine whether certain types of object are present.

Image classification is the task of assigning labels to input images and is one of the core problems of computer vision. It is closely interlinked with, and therefore inseparable from, machine learning and deep learning. Currently, the most popular image classification architecture is the convolutional neural network (C.N.N.). Image classification is commonly used by online stores to automatically create categories for their products. Airbnb, for instance, has employed an image classification algorithm to classify its rental offerings (Mansouri, 2019).
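The operation that gives the convolutional neural network its name can be shown in a few lines. The sketch below slides a small kernel over a grayscale image and sums the element-wise products (strictly a cross-correlation, which is what most deep learning libraries compute under the name "convolution"). The kernel here is hand-picked for illustration, whereas in a trained network the kernel values are learned from labelled images; the function and variable names are assumptions.

```python
def convolve2d(image, kernel):
    """Valid-mode 2-D cross-correlation of a grayscale image with a kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[y + i][x + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


# A 3x4 image with a dark left half and a bright right half.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Hand-picked kernel that responds to dark-to-bright vertical edges.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
feature_map = convolve2d(image, vertical_edge)
print(feature_map)
```

The resulting feature map is non-zero only where the edge lies. A classifier built on such networks stacks many learned kernels of this kind and feeds the final feature maps to a layer that outputs one score per label.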

Image Segmentation

In image processing it is sometimes necessary to isolate and split the image in order to extract valuable parts for subsequent processing, for example when filtering feature points or partitioning the parts of one or more pictures that contain specific targets. Image segmentation refers to the process of subdividing a digital image into multiple sub-regions (sets of pixels, also known as super-pixels). Its role is to simplify the representation of the image, making it easier to understand and analyze. More precisely, it is a process of labeling each pixel in an image so that pixels with the same label share some common visual characteristics.
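The notion of assigning a label to every pixel can be made concrete with a deliberately simple sketch: bright pixels are grouped into connected regions, and each region receives its own label. Thresholding followed by connected-component labeling is a classical technique chosen here only for illustration; the threshold value, the 4-neighbour connectivity and the function name are assumptions.

```python
from collections import deque


def segment(gray_image, threshold):
    """Label each pixel: 0 for background, 1..k for each connected bright region."""
    height, width = len(gray_image), len(gray_image[0])
    labels = [[0] * width for _ in range(height)]
    next_label = 0
    for y in range(height):
        for x in range(width):
            if gray_image[y][x] < threshold or labels[y][x] != 0:
                continue  # background pixel, or already part of a region
            next_label += 1
            labels[y][x] = next_label
            queue = deque([(y, x)])
            # Breadth-first flood fill over the 4-connected bright neighbours.
            while queue:
                cy, cx = queue.popleft()
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if (
                        0 <= ny < height
                        and 0 <= nx < width
                        and gray_image[ny][nx] >= threshold
                        and labels[ny][nx] == 0
                    ):
                        labels[ny][nx] = next_label
                        queue.append((ny, nx))
    return labels


image = [
    [200, 210, 10, 10],
    [205, 12, 11, 220],
    [10, 13, 215, 225],
]
labels = segment(image, threshold=128)
for row in labels:
    print(row)
```

Pixels that share a label here share one visual characteristic (being bright and adjacent). Learned segmentation models replace this hand-written rule with labels predicted from training data.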

"Image semantic segmentation" is pixel-level object recognition: each pixel is assigned a category. As in other computer vision tasks, convolutional neural networks have been very successful in segmentation. This success has been built upon with the Fully Convolutional Network (FCN), proposed at the University of California, Berkeley. FCN is an end-to-end CNN architecture for dense prediction without any fully connected layer (Le, 2018). Image segmentation is prevalent in many fields, including autonomous vehicles, detection of tumors or pathologies, satellite image recognition and manufacturing (Mansouri, 2019).

Object location

If image recognition answers “what”, object location answers “where”. Computer vision technology is employed to locate the position of an object in the image. Object location is of great importance to applications of computer vision in areas such as security and automatic driving. In the field of intelligent vehicles, computer vision is still the primary source of information for detecting traffic signs, lights and other visual features.

4.2 Application cases of computer vision

Computer vision has seen a vast number of trends ebb and flow. One of the most outstanding is the explosive growth of practical applications. Computer vision technology already occupies the markets for mobile phones, personal computers and industrial inspection, and its application has since expanded into many other fields such as automatic driving, intelligent healthcare, intelligent security, robots, unmanned aerial vehicles (UAVs), augmented reality (A.R.) and so on.

Face recognition

Facial recognition is the most widespread application in the "vision and image" field of artificial intelligence. Facial recognition technology has been employed across sectors including finance, justice, the military, public security, border inspection, government, aerospace, power, manufacturing, education, healthcare and many other industries. It is in great demand in China, and demand-driven enterprises are keen to invest. At present, the technology has the capability and potential for large-scale commercial use, which is expected to grow rapidly over the next three to five years. This year, the technology is anticipated to grow explosively in finance and security.

Facial recognition technology has three core application scenarios. The first is 1:1 authentication, which verifies whether the information on a certificate matches the person presenting it and is largely used for real-name verification. The second is 1:N identification, which determines whether a person is a member of a particular group and is commonly used for personnel access management and urban security. The third is liveness detection, which ensures that a real person is carrying out the transaction and thus authorizing the account.
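The difference between 1:1 and 1:N matching can be sketched as follows, under the assumption that a separate model has already converted each face image into a numeric feature vector. The vectors, the names "alice" and "bob", the cosine similarity measure and the 0.9 threshold are all hypothetical; they do not describe how Face++ or any other vendor implements matching.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def verify(probe, enrolled, threshold=0.9):
    """1:1 check: does the probe face match the one enrolled identity?"""
    return cosine_similarity(probe, enrolled) >= threshold


def identify(probe, gallery, threshold=0.9):
    """1:N search: return the best-matching identity in the gallery, or None."""
    best_name, best_score = None, threshold
    for name, features in gallery.items():
        score = cosine_similarity(probe, features)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name


# Hypothetical 3-dimensional features; real systems use far longer vectors.
gallery = {"alice": [0.9, 0.1, 0.3], "bob": [0.1, 0.8, 0.5]}
probe = [0.88, 0.12, 0.31]

print(verify(probe, gallery["alice"]))
print(verify(probe, gallery["bob"]))
print(identify(probe, gallery))
print(identify([0.5, 0.5, -0.7], gallery))
```

In this sketch 1:1 authentication is a single comparison against a claimed identity, while 1:N identification repeats the comparison over a whole group and must also be able to answer "nobody". Liveness detection is a separate problem that this sketch does not address.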

As an example, as early as 2015, the unicorn company Face++ was producing face-scanning technology. At the opening ceremony of the world-renowned Hanover Consumer Electronics, Information and Communication Expo (CeBIT) in Germany, Alibaba Chairman Jack Ma demonstrated the “Smile to Pay” technology of Ant Financial. This face-payment function was developed jointly by Ant Financial and Face++, the latter having long been a global leader in artificial intelligence, particularly in the field of facial recognition.

The products of Face++ cover more than 80% of the market in Fintech, O2O travel, intelligent retail and other fields, with more than 30 million requests processed daily on its intelligent open platform. Among these, more than 40% of users are from overseas, and client developers come from over 200 countries. Currently, finance is the field in which Face++ works most deeply and in which its products are most widely deployed. Its best-known business is its collaboration with Ant Financial. Alipay, the leading online payment platform in the world, uses Face++'s face authentication solution to improve both user experience and transaction security.

Intelligent driving

With the popularity of, and demand in, the automobile market, automobiles have become a major application area for artificial intelligence technology. The defined levels of intelligent driving are driver only, assisted (e.g. park assist), partial automation (e.g. traffic jam assist), conditional automation (e.g. highway patrol), high automation (e.g. urban automated driving) and full automation (e.g. full end-to-end journey) (Ramos, 2016). Artificial intelligence technology makes driver assistance functions and applications increasingly feasible, and most of these applications are based on computer vision and image processing technology. At this stage, however, considerable progress is still needed before the technology is mature enough to fully realize automatic, unmanned driving.

Medical imaging

Over 90% of medical data is derived from medical images, and the field of medical imaging therefore has a vast amount of data available for deep learning. Medical imaging diagnosis is invaluable: it can assist doctors in healthcare settings and help them improve their diagnostic efficiency. In April 2015, IBM set up the Watson Health division and entered the medical industry. Only four months later, IBM announced a $1 billion acquisition of Merge Healthcare, a medical imaging company, merging it with the newly established Watson Health. Six months later, IBM paid an additional $2.6 billion for Truven Health Analytics, a medical data company. Shortly after, at the HIMSS 17 conference, Watson Health announced IBM's first cognitive imaging product, Watson Imaging Clinical Review, which analyzes medical data and images to help medical service providers identify the most critical situations that need to be addressed (Grand, 2017).

Video surveillance

Artificial intelligence technology can quickly search and query structured video content about people, cars and objects. This makes it feasible for public security systems to search for and locate criminals in complex surveillance videos. The technology is also widely used for prevention and control, crowd analysis, and early-warning detection in places such as traffic hubs, where large numbers of people are moving. Video surveillance offers broad scope for profit and a range of business models, from industry-wide solutions to sales of integrated hardware devices. Artificial intelligence companies are seeing a trend toward applying the technology in video surveillance, and it is consequently expected to lead applications in the security, transportation and even retail industries.

Image recognition

Admittedly, fewer enterprises than anticipated are employing artificial intelligence in the form of image recognition and analysis. There may be several reasons for this. First, the profit margin in video surveillance is currently considerable, so many enterprises focus on that field. Second, facial recognition is itself an application scenario of image recognition; most enterprises that offer facial recognition also provide image recognition services, but sales are poor because the major profit point is still face recognition. Third, most commercial scenarios for image recognition remain a "blue ocean" whose potential has yet to be developed. Finally, picture data is mostly held by large Internet companies, so the data resources of start-up companies are scarce.

5. Challenges of Computer Vision Application

Technical challenges

To date, computer vision has undergone 60 years of development; however, there are only a small number of large-scale mature applications, such as fingerprint recognition, license plate recognition and face detection. This is owing to technological limitations. In recent years, with the application of deep learning in computer vision, many technologies have made momentous advances, yet many technical challenges remain to be overcome.

Consider, for example, ILSVRC 2016, the annual ImageNet competition and one of the most pivotal competitions in the field of vision. In its object detection task, in which the computer automatically produces rectangular frames around the outer edges of the various objects in an image, the best result reached a mean average precision (mAP) of 0.663, that is, an average accuracy of approximately 66% (UNC Vision Lab, 2016). This result represents the highest level in the world, but it can only be used in scenarios where the accuracy requirement is not particularly high, and it is consequently not yet close to large-scale application.
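To give a sense of what a mean average precision figure summarizes, the sketch below computes average precision for each object class from a ranked list of detections and then averages across classes. It uses one common non-interpolated definition and assumes that each detection has already been marked as correct or incorrect (in practice this is decided by how well the predicted frame overlaps a ground-truth frame). The exact ILSVRC evaluation protocol may differ in its details, and the sample data is invented.

```python
def average_precision(detections, num_ground_truth):
    """AP for one class.

    detections: list of (confidence, is_true_positive) pairs.
    num_ground_truth: number of real objects of this class in the test set.
    """
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    true_positives = 0
    precision_sum = 0.0
    for rank, (_, is_tp) in enumerate(ranked, start=1):
        if is_tp:
            true_positives += 1
            # Precision measured at the rank of each correct detection.
            precision_sum += true_positives / rank
    return precision_sum / num_ground_truth if num_ground_truth else 0.0


def mean_average_precision(per_class):
    """Average the per-class AP values; per_class maps name -> (detections, count)."""
    aps = [average_precision(dets, count) for dets, count in per_class.values()]
    return sum(aps) / len(aps)


# Invented results for two classes, each with two real objects in the test set.
per_class = {
    "cat": ([(0.9, True), (0.8, False), (0.7, True)], 2),
    "dog": ([(0.95, True), (0.6, False)], 2),
}
print(round(mean_average_precision(per_class), 3))
```

Missed objects lower the score because the sum is divided by the number of ground-truth objects, and false alarms lower it because they reduce the precision at every later rank.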

At the application level, meanwhile, what we ultimately want to achieve is user value. Visual technology on its own may not yet be sufficient, but there is no need to wait for it to mature further before it can be used. Rather, owing to its immaturity and imperfection, visual technology must be combined with other technologies and with product applications in order to deliver adequate value.

Ethical challenges

When A.I. is created within an enterprise, numerous decisions have to be made, encompassing the impact of privacy regulation policies as well as legal, ethical and cultural aspects. Even when the team behind the product begins with good intent, the final product could still be unethical. Kate Crawford, Co-Founder of AI Now, explains that if the team behind an algorithm does not itself come from diverse backgrounds, it puts the whole intention of the product at risk and may cause “unintentional biases”. As Crawford goes on to explain, “often when we talk about ethics, we forget to talk about power. We're seeing a lack of thinking about how real power asymmetrics are affecting different communities.” (Re-Work, 2018).

Facial recognition is one form of A.I. that could be susceptible to such bias. Researchers at Nvidia deployed a new generative algorithm in 2018 to create realistic faces using a generative adversarial network. In their system, the algorithm can also adjust various elements, such as age and freckle density. Software that can automatically transfer the actions of a person in one video to a person in another video was developed by a team at the University of California, Berkeley (Tangermann, 2018). Essentially, we have for some time now been training computers to watch videos and infer the corresponding information about the physical world in order to understand how things interact in the real world. This has nonetheless led to an increasing number of A.I. scams, such as fake videos and fake images. In the future, this problem will inevitably become more acute.

Facial recognition technology has in recent years been challenged in the courts. According to the ‘2019 Tech Trends Report’ from the Future Today Institute, in 2017 a federal judge allowed a class action lawsuit to proceed against Shutterfly, which allegedly violated the Illinois Biometric Information Privacy Act by collecting people’s biometric data without written permission. Similarly, Facebook faced a lawsuit filed by residents of Illinois who believed that Facebook’s identification of their faces in the platform’s photo tagging function also violated this law.

6. Suggestions for practitioners

A.I. has unquestionably improved productivity, and it can continue to do so admirably. Dramatic changes in usage scenarios have upgraded the interaction mode of A.I. from the traditional graphical user interface to a human-computer interaction mode closer to natural human behavior, returning to humans' instinctive experience (Du, 2017). In future product design, simple logical thinking will no longer be adequate, and the weight of emotional factors will rise sharply.

Products therefore need to thoroughly explore the core experience behind the interface instead of remaining at the interface layer. For product practitioners, purely professional skills may not be sufficient to enhance product capability. It is necessary to broaden horizontally and understand technology, both software and hardware, on a more profound level. It is also important to fully comprehend and master the application of data. The following are suggestions for improving product experience through A.I. at the level of implementation.

Design with, not for, artificial intelligence

The level of intelligence of A.I. in most areas is in fact just “weak A.I.”. General computer vision technology is unlikely to mature in the short term. Only research institutions and the institutes of large companies have the ability to conduct large-scale application and technology research. Ordinary companies that design and develop products should focus on implementing products through A.I. rather than investing in A.I. development for the sake of creating A.I. (Sappington, 2019). From the perspective of the industrial chain, only by going deep into an application scenario can a company form a closed loop and gain access to data, and only with business and data can the company occupy a place in the market and form a real "moat".

If the result of machine learning is inaccurate, do product designers provide users with convenient "non-intelligent" ways to solve the problem? When designing an A.I. product, designers should always plan for the worst-case outcome of machine learning. This contingency plan is just as imperative as the primary plan, and often even more crucial, because once users have a disappointing or frustrating experience with a product, it is easy for them to give up on it entirely, and such a situation is very difficult to recover from. It follows that if there is insufficient confidence in machine intelligence, it is better to temporarily skip the development of certain functions and proceed, as observed by Sappington. A safe and effective approach is to produce a minimum viable product (MVP); in this sense, “less is more”. Designers will still face challenges, such as how to clearly convey the benefits of "smart" features to users and how to provide effective solutions for errors that may occur at any time.
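One way to read this advice in implementation terms is a confidence gate: the product applies the machine's answer automatically only when the model is confident, and otherwise hands control back to the user with the prediction offered as a hint. The sketch below is a hypothetical illustration; the function name, the returned fields and the 0.8 threshold are assumptions rather than anything proposed by Sappington.

```python
def choose_interaction(prediction, confidence, threshold=0.8):
    """Decide whether to act on a model prediction or fall back to manual input."""
    if confidence >= threshold:
        # Confident enough: apply the "smart" result directly.
        return {"mode": "auto", "value": prediction}
    # Not confident: keep the "non-intelligent" path, offering the guess as a hint.
    return {"mode": "manual", "value": None, "hint": prediction}


# Hypothetical example: auto-tagging a product photo.
print(choose_interaction("sneakers", confidence=0.93))
print(choose_interaction("sandals", confidence=0.41))
```

The threshold then becomes a product decision rather than a purely technical one: setting it high trades fewer automatic results for fewer visible mistakes, which matters when a single bad experience can drive a user away.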

Reduce user threshold and build user trust

Mandatory tasks should be simplified as much as possible before users start using the product. Providing personalized content for users is a very common application scenario of A.I. technology, and understanding users’ basic information is often the basis of that personalization. Obtaining user information sounds simple enough, yet it is not easy to do. If the acquisition of initial data is compulsory and onerous and, moreover, users do not see the benefits of providing their data, then "training artificial intelligence" feels like "voluntary labor" to users, something to be avoided in product design (Du, 2017). Therefore, we should try to give timely feedback in return for the user's efforts. The design of this feedback mechanism in A.I. products is akin to that in game design. What we need to construct is a positive, rhythmic and short cycle: first task -> timely feedback -> motivating users to complete the next task.

The core of a product is essentially a means to meet the needs of users. To build user trust, it is imperative to start with small details. Trust between people is built through time and cooperation, and trust between people and machines can be considered in the same way. Both require frequent interaction and a low error rate, and this is the entry point that all A.I. products should seek at this stage. Compared with traditional products, A.I. products face greater challenges at different stages of production (Li, 2018). If the technology is advanced but presents a high barrier of entry for users or fails to deliver product value directly, the product will not succeed.

Train and supervise A.I. in a proper way

As mentioned above, in some cases A.I. technology has demonstrated distinct flaws such as racial discrimination in facial recognition, unfair rejection of personal loan applications, misidentification of users' basic information and so on. Artificial intelligence is becoming more and more popular, but existing regulators have not yet begun to conduct comprehensive supervision. Such applications should be identified as early as possible, and regulators should be designated to achieve the regulatory objectives. We must recognize that the purpose of laws and regulations is to protect human beings from harm.

A.I. must be taught and qualified to deal with the real dilemmas facing humanity. At the same time, A.I. training needs to be both supervised and monitored to ensure that A.I. does not make harmful decisions. In this process, we need to consider above all justice, accountability, transparency and human well-being, as well as resistance to hacker attacks and data breaches. The impact of A.I. systems in practical application scenarios, such as the safety of self-driving vehicles, should be standardized. This should take the place of attempts to define and control particular concepts in an unformed and rapidly developing A.I. domain.

References

Du, Song. "Four Basic Questions for Product Managers in the Age of Artificial Intelligence". Woshipm.Com, 2017, http://www.woshipm.com/ai/809632.html.

Grand, Anne. "Introducing IBM Watson Imaging Clinical Review". IBM, 2017, https://www.ibm.com/blogs/watson-health/introducing-ibm-watson-imaging-clinical-review/. Accessed 11 May 2019.

Gordon, Brent M. Artificial Intelligence. Nova Science Publishers, 2011.

Heaven, Tom. "The Difference and Relationship among Computer Vision, Computer Graphics, Digital Image Processing - CSDN Blog". Blog.Csdn.Net, 2019, https://blog.csdn.net/hanlin_tan/article/details/50447895.

Indurkhya, Nitin, and Frederick J Damerau. Handbook Of Natural Language Processing. Chapman & Hall/CRC, 2010.

Le, James. "The 5 Computer Vision Techniques That Will Change How You See The World". Heartbeat, 2018, https://heartbeat.fritz.ai/the-5-computer-vision-techniques-that-will-change-how-you-see-the-world-1ee19334354b.

Li, Te. "Artificial Intelligence Product Manager Series (9) Necessary Conditions for the Success of AI Products". Zhihu zhuanlan, 5 May 2018, https://zhuanlan.zhihu.com/p/36871066.

Shapiro, Linda G., and George C. Stockman. Computer Vision. 1st ed., Prentice Hall PTR, 2001.

"AI Vision and Voice: Artificial Intelligence ‘View’ ‘Listen’ Feast". Wallstreetcn, 2019, https://wallstreetcn.com/articles/3498067.

Mansouri, Ilias. "Part 2: Overview Of Computer Vision Methods". Medium, 2019, https://medium.com/overture-ai/part-2-overview-of-computer-vision-methods-69c56843c567.

Poole, David L, and Alan K Mackworth. Artificial Intelligence: Foundations Of Computational Agents. Cambridge University Press, 2010.

Re-Work. White Paper: The Ethical Implications Of AI. 2018, https://www.prweb.com/releases/2018/05/prweb15477008.htm. Accessed 10 May 2019.

Ramos, Sebastian. "Materials - Tutorial On Computer Vision For Autonomous Driving". Sites.Google.Com, 2016, https://sites.google.com/site/cvadtutorial15/materials?spm=a2c4e.11153940.blogcont228194.8.eb851fdb3Op8wK.

Sappington, Emily. "Interaction 19 – 3-8 February 2019 • Seattle, WA". Interaction 19, 2019, https://interaction19.ixda.org/program/talk-designing-for-ai-emily-sappington/.

Trucco, Emanuele, and Alessandro Verri. Introductory Techniques For 3-D Computer Vision. Prentice Hall, 2006.

Tangermann, Victor. "Look at These Incredibly Realistic Faces Generated By A Neural Network". Futurism, 13 Dec. 2018, https://futurism.com/incredibly-realistic-faces-generated-neural-network.

UNC Vision Lab. "Large Scale Visual Recognition Challenge 2016 (ILSVRC2016)". ILSVRC2016, 2016, image-net.org/challenges/LSVRC/2016/results.